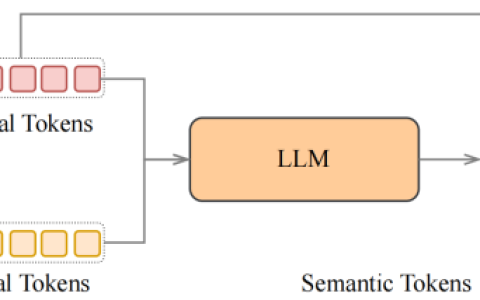

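Overview

Abilities

- Human-like speech: natural intonation, emotion, and rhythm, superior to SOTA closed-source models

- Zero-shot voice cloning: clone voices without prior fine-tuning

- Guided emotion and intonation: control speech and emotion characteristics with simple tags

- Low latency: ~200 ms streaming latency for real-time applications, reducible to ~100 ms with input streaming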
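To make the latency figure concrete, here is a minimal sketch of one way to measure time to first audio chunk for any streaming generator. It is not part of the Orpheus API: `time_to_first_chunk` and `fake_stream` are hypothetical names, and `fake_stream` merely simulates a model that yields raw 16-bit PCM chunks after a short delay.

```python
import time
from typing import Iterable, Iterator, Tuple


def time_to_first_chunk(chunks: Iterable[bytes]) -> Tuple[float, Iterator[bytes]]:
    """Return (seconds until the first chunk arrived, iterator over all chunks)."""
    start = time.monotonic()
    iterator = iter(chunks)
    first = next(iterator)  # blocks until the producer yields its first chunk
    latency = time.monotonic() - start

    def replay() -> Iterator[bytes]:
        # Hand the first chunk back so the caller still sees the full stream.
        yield first
        yield from iterator

    return latency, replay()


def fake_stream(n_chunks: int = 3, delay_s: float = 0.05) -> Iterator[bytes]:
    """Hypothetical stand-in for a streaming TTS generator (not the Orpheus API)."""
    for _ in range(n_chunks):
        time.sleep(delay_s)  # simulated per-chunk generation time
        yield b"\x00\x00" * 1200  # 1200 silent 16-bit mono frames


latency, stream = time_to_first_chunk(fake_stream())
chunks = list(stream)
print(f"first chunk after {latency * 1000:.0f} ms, {len(chunks)} chunks total")
```

In principle the same helper could wrap a real chunk generator, since it only assumes an iterable of `bytes`; the number it reports then includes whatever setup the generator does before its first yield.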

Streaming Inference Example

Clone this repo:

    git clone https://github.com/canopyai/Orpheus-TTS.git

Navigate and install packages:

    cd Orpheus-TTS && pip install orpheus-speech # uses vllm under the hood for fast inference

vLLM released a version on March 18, and some bugs are resolved by reverting to vllm 0.7.3 after installing orpheus-speech:

    pip install vllm==0.7.3

Run the example below:

    from orpheus_tts import OrpheusModel
    import wave
    import time

    model = OrpheusModel(model_name="canopylabs/orpheus-tts-0.1-finetune-prod")
    prompt = '''Man, the way social media has, um, completely changed how we interact is just wild, right? Like, we're all connected 24/7 but somehow people feel more alone than ever. And don't even get me started on how it's messing with kids' self-esteem and mental health and whatnot.'''

    start_time = time.monotonic()
    syn_tokens = model.generate_speech(
        prompt=prompt,
        voice="tara",
    )

    with wave.open("output.wav", "wb") as wf:
        # Mono, 16-bit samples, 24 kHz
        wf.setnchannels(1)
        wf.setsampwidth(2)
        wf.setframerate(24000)

        total_frames = 0
        chunk_counter = 0
        for audio_chunk in syn_tokens:  # output streaming
            chunk_counter += 1
            # Each frame is sampwidth * nchannels bytes
            frame_count = len(audio_chunk) // (wf.getsampwidth() * wf.getnchannels())
            total_frames += frame_count
            wf.writeframes(audio_chunk)
        duration = total_frames / wf.getframerate()

        end_time = time.monotonic()
        print(f"It took {end_time - start_time} seconds to generate {duration:.2f} seconds of audio")
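The duration arithmetic in the example can be checked in isolation. The sketch below assumes the same audio format the example sets on the WAV file (24 kHz, 16-bit, mono); `pcm_duration_seconds` and `real_time_factor` are hypothetical helper names, not part of `orpheus_tts`.

```python
def pcm_duration_seconds(num_bytes: int, sample_rate: int = 24000,
                         sample_width: int = 2, channels: int = 1) -> float:
    """Duration of raw PCM audio, using the same frame math as the example."""
    frames = num_bytes // (sample_width * channels)  # bytes -> frames
    return frames / sample_rate                      # frames -> seconds


def real_time_factor(generation_seconds: float, audio_seconds: float) -> float:
    """Values below 1.0 mean audio is produced faster than it plays back."""
    return generation_seconds / audio_seconds


audio_s = pcm_duration_seconds(96_000)  # 96,000 bytes = 48,000 frames
print(audio_s)                          # 2.0
print(real_time_factor(1.0, audio_s))   # 0.5
```

A real-time factor under 1.0 is what streaming playback needs: chunks arrive faster than they are consumed, so playback can start before generation finishes.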

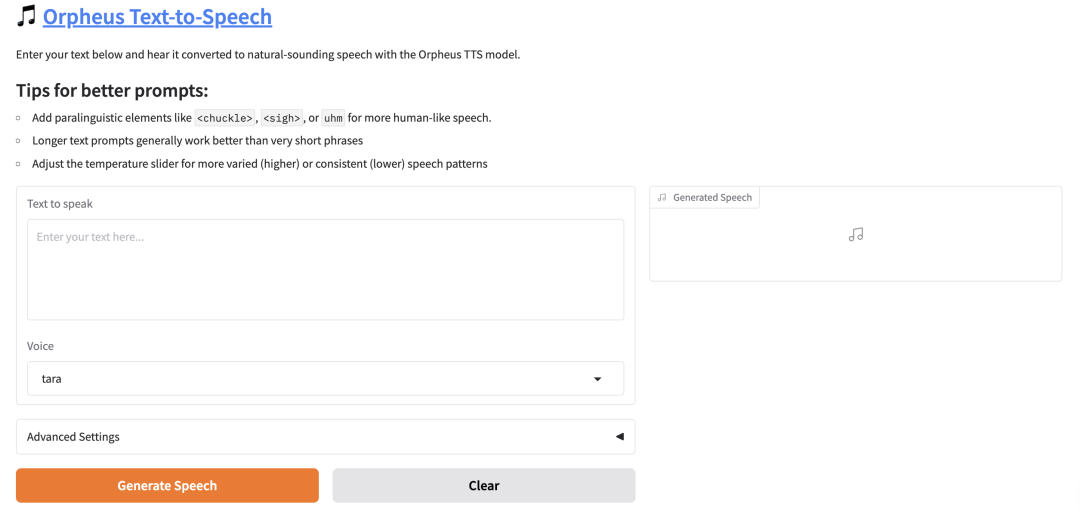

https://huggingface.co/spaces/MohamedRashad/Orpheus-TTS